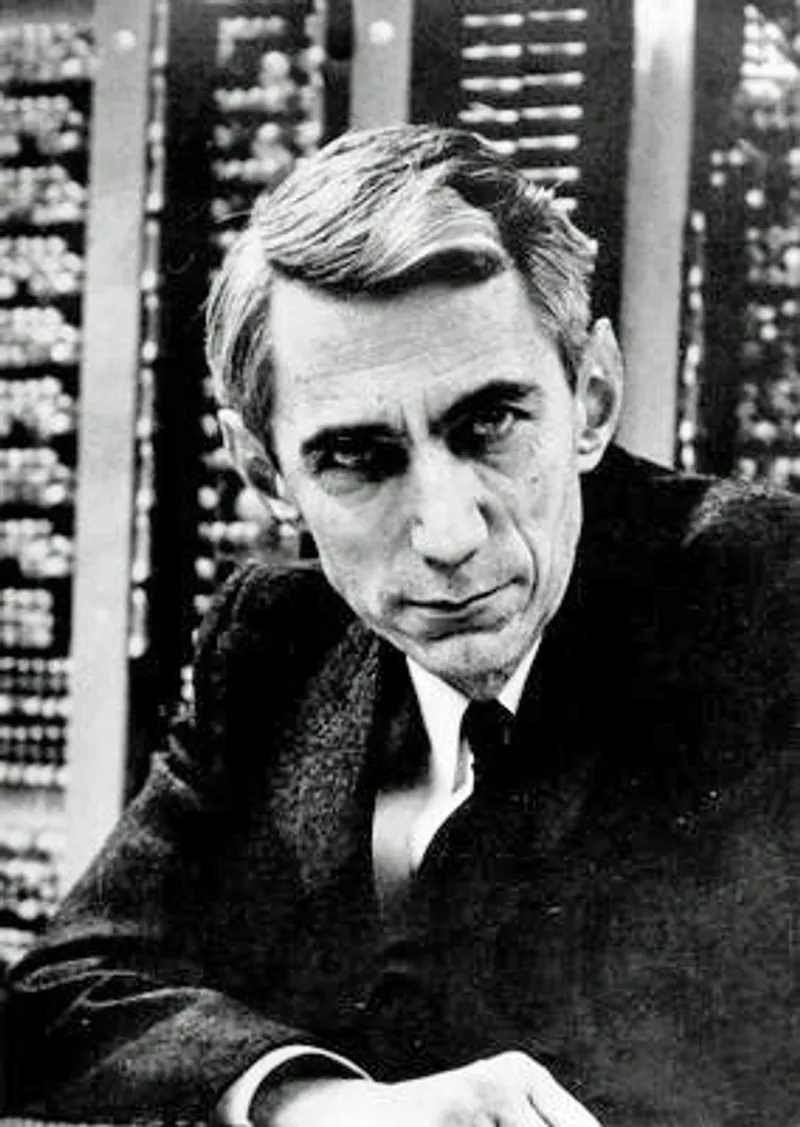

Claude Elwood Shannon (1916–2001) was an American mathematician, electrical engineer, and cryptographer known as the “father of information theory.” His work laid the theoretical foundations for the digital age, establishing the mathematical framework for communication, computation, and data storage that underlies virtually all modern technology.

Early Life and Education

Shannon was born on April 30, 1916, in Petoskey, Michigan. His father was a businessman and judge; his mother was a language teacher who became principal of Gaylord High School. Growing up in Gaylord, Shannon showed an early aptitude for mechanical and electrical tinkering, building model airplanes, a radio-controlled boat, and even a barbed-wire telegraph system to a friend’s house half a mile away.

In 1932, Shannon entered the University of Michigan, where he earned bachelor's degrees in both electrical engineering and mathematics in 1936, a combination that foreshadowed his later work bridging the two fields.

The Most Important Master’s Thesis

Shannon moved to MIT in 1936 to work as a research assistant to Vannevar Bush on the differential analyzer, an analog computer that was among the most advanced calculating machines of its time. While servicing the machine's relay circuits, Shannon recognized that the two states of a switch, open and closed, corresponded to the two truth values of George Boole's symbolic logic.

His 1937 master’s thesis, “A Symbolic Analysis of Relay and Switching Circuits,” demonstrated that Boolean algebra could be used to design and simplify electrical circuits[1]. This insight—that logical operations could be implemented in hardware—became the theoretical foundation of digital computing. Computer scientist Herman Goldstine called it “surely one of the most important master’s theses ever written.”
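The correspondence between switches and Boolean algebra can be illustrated with a minimal sketch. It assumes the modern convention that a closed switch is `True`, so that switches in series behave like AND and switches in parallel behave like OR; the thesis uses its own notation, and the function names and the example circuit here are hypothetical, chosen only for illustration. The sketch checks that a four-contact circuit and a three-contact circuit conduct under exactly the same switch settings, which is the kind of simplification the distributive law permits.

```python
from itertools import product


def series(a: bool, b: bool) -> bool:
    """Two switches in series conduct only if both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel conduct if either is closed (OR)."""
    return a or b


def original_circuit(x: bool, y: bool, z: bool) -> bool:
    # (x in series with y) in parallel with (x in series with z): 4 contacts
    return parallel(series(x, y), series(x, z))


def simplified_circuit(x: bool, y: bool, z: bool) -> bool:
    # x in series with (y in parallel with z): 3 contacts
    return series(x, parallel(y, z))


def equivalent(f, g, n_inputs: int) -> bool:
    """Compare two circuits on every combination of switch positions."""
    return all(
        f(*bits) == g(*bits)
        for bits in product([False, True], repeat=n_inputs)
    )


print(equivalent(original_circuit, simplified_circuit, 3))  # True
```

Because a circuit with n switches has only 2^n possible settings, equivalence can be checked exhaustively for small circuits; the algebra is what makes it possible to find the simpler circuit in the first place.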

Bell Labs and Information Theory

After completing his PhD in mathematics at MIT in 1940, Shannon joined Bell Labs in 1941. During World War II, he worked on fire-control systems and on cryptography, including SIGSALY, the secure voice communication system used by Roosevelt and Churchill.

In 1948, Shannon published “A Mathematical Theory of Communication” in the Bell System Technical Journal[2]. This paper founded information theory by:

- Defining information mathematically through the concept of entropy

- Introducing the bit as the fundamental unit of information (Shannon credited the word itself to John W. Tukey)

- Proving the source coding theorem, establishing limits on data compression

- Proving the noisy channel coding theorem, showing that reliable communication over noisy channels is possible up to a fundamental limit
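Two of these ideas can be made concrete with a short sketch. It computes the entropy of a discrete source in bits, H = -Σ p·log2(p), and the capacity of a binary symmetric channel, C = 1 - H(p), a standard textbook case of the noisy channel limit. The function names and the choice of channel are assumptions made for illustration and are not taken from the paper's text.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete distribution.

    Terms with probability zero contribute nothing and are skipped.
    """
    return -sum(p * math.log2(p) for p in probs if p > 0)


def bsc_capacity(flip_prob):
    """Capacity in bits per use of a binary symmetric channel.

    flip_prob is the probability that the channel flips a transmitted bit.
    """
    return 1.0 - entropy([flip_prob, 1.0 - flip_prob])


fair_coin = entropy([0.5, 0.5])      # 1.0 bit per toss
biased_coin = entropy([0.9, 0.1])    # roughly 0.469 bits per toss
noisy_channel = bsc_capacity(0.1)    # roughly 0.531 bits per use

print(fair_coin, round(biased_coin, 3), round(noisy_channel, 3))
```

The biased coin is more predictable than the fair one, so each toss carries less than one bit and its outcomes can in principle be compressed further. A channel that flips one bit in ten can still carry a little over half a bit of reliable information per use, and one that flips bits half the time carries none.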

Historian James Gleick rated the paper as the most important development of 1948—more significant even than the transistor. Scientific American called it the “Magna Carta of the Information Age.”

The Playful Genius

Shannon was known for approaching research with curiosity, humor, and play. At Bell Labs, he famously rode a unicycle through the hallways while juggling. His playful inventions included:

- Theseus (1950): A mechanical mouse, guided by relay circuits, that could navigate a maze and remember the route it had found, an early demonstration of machine learning

- Chess-playing machines: Pioneering work that helped establish artificial intelligence

- The Ultimate Machine: A box whose sole function was to turn itself off when switched on

Shannon also wrote papers on juggling and designed a juggling robot, and he built a calculator that operated in Roman numerals.

Later Career and Legacy

Shannon joined MIT's faculty in 1956 while maintaining ties to Bell Labs. He received numerous honors, including the National Medal of Science (1966), the IEEE Medal of Honor (1966), and the Kyoto Prize (1985).

Shannon developed Alzheimer’s disease in his later years and died on February 24, 2001, at age 84. His achievements are often compared to those of Einstein, Newton, and Darwin.

Roboticist Rodney Brooks declared Shannon “the 20th century engineer who contributed the most to 21st century technologies.” The AI language model Claude was named in his honor[3].

Sources

[1] Wikipedia. “A Symbolic Analysis of Relay and Switching Circuits.” Details Shannon’s master’s thesis.

[2] Wikipedia. “A Mathematical Theory of Communication.” Overview of Shannon’s information theory paper.

[3] Quanta Magazine. “How Claude Shannon Invented the Future.” Assessment of Shannon’s lasting impact.